Question: Where can I find preconfigured GPU instances with customizable combinations of GPUs, RAM, and vCPUs for my AI work?

RunPod

If you want preconfigured GPU instances with a range of combinations, RunPod is a good option. The cloud service lets you run any GPU workload with a range of GPUs (MI300X, H100 PCIe, A100 PCIe, and so on) and charges by the minute. It also offers serverless ML inference, autoscaling and job queuing, and more than 50 preconfigured templates for common frameworks. The service offers a CLI tool for easy provisioning and deployment.

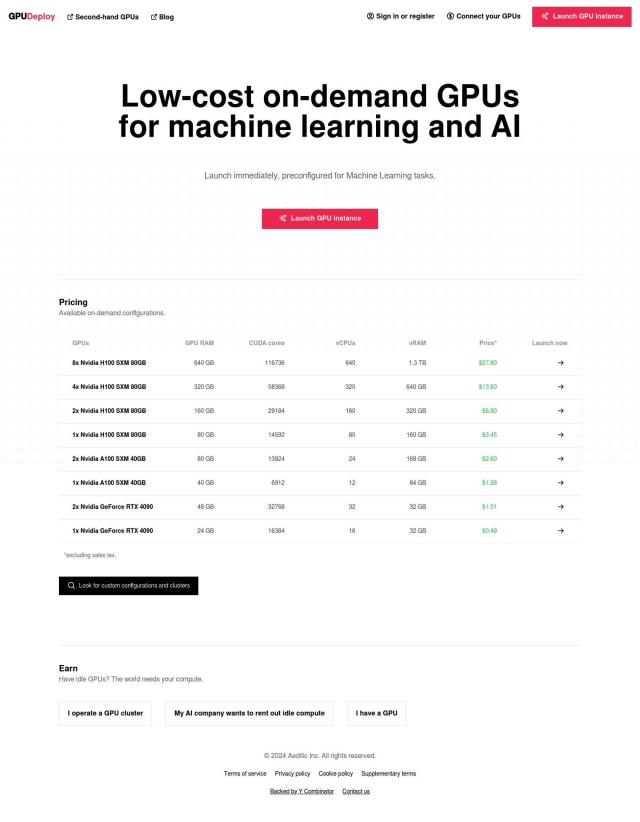

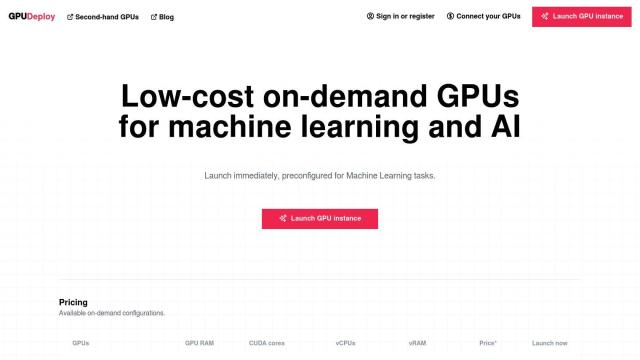

GPUDeploy

Another option is GPUDeploy, which offers on-demand, pay-by-the-minute GPU instances tuned for machine learning and AI work. You can pick from a range of preconfigured instances, including combinations of up to 8 Nvidia H100 SXM 80GB GPUs, depending on your project needs. The service is good for developers and researchers who can tap into idle GPUs, and it's got a marketplace to buy and sell used GPUs.

Salad

If you're on a budget, check out Salad. The service lets you run and manage AI/ML production models at scale using thousands of consumer GPUs around the world. It's got features like scalability, a fully-managed container service and multi-cloud support. With a starting price of $0.02 per hour, and deeper discounts for large-scale usage, Salad is a good option for GPU-heavy workloads.

Anyscale

Last, Anyscale is another powerful service for building, deploying and scaling AI applications. It supports a broad range of AI models and can run on a variety of clouds and on-premise environments. With features like workload scheduling, heterogeneous node control and GPU and CPU fractioning, Anyscale can help you optimize resource usage and cut costs. It also comes with native integrations with popular IDEs and a free tier with flexible pricing.