Question: Is there a one-stop AI stack that integrates with popular tools like LangChain and GitHub Copilot for seamless development?

Keywords AI

For a full AI stack that dovetails with tools like LangChain and GitHub Copilot, Keywords AI is a strong contender. It's a single DevOps platform for building, deploying and monitoring LLM-based AI applications. It can handle hundreds of concurrent requests without a latency hit, integrates easily with OpenAI APIs, and includes a playground and prompt management interface for testing and iterating on models. Pre-built dashboards provide visualization and logging. Performance monitoring, data collection and fine-tuning help you squeeze more performance out of your models. The platform is geared toward AI startups.

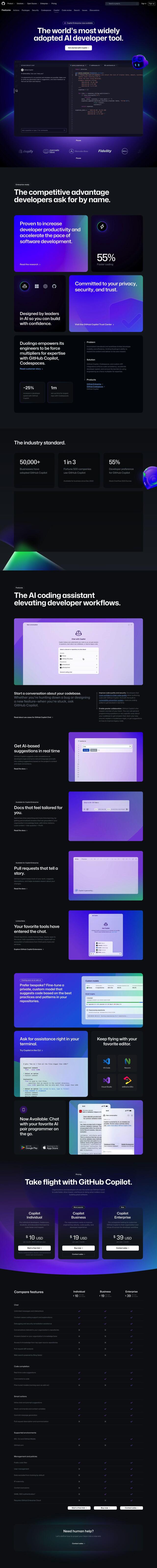

GitHub Copilot

Another top contender is GitHub Copilot, an AI-powered developer tool that offers context-aware help at every stage of the development process. It provides code completion and chat assistance, and it can be integrated with other tools and services for tasks such as log error checking and cloud deployment. Because GitHub Copilot works with multiple IDEs and terminals, it fits easily into whatever workflow you already have. It is trained on natural language text and source code from publicly available sources.

LangChain

LangChain is another good option for developers who want to build and deploy context-aware, reasoning applications. It spans the LLM application lifecycle, from development to deployment, and offers tools like LangSmith for performance monitoring and LangServe for API deployment. LangChain can connect to multiple APIs and private data sources, which makes it a good fit for financial services and tech companies where operational efficiency and product improvement are priorities.