Question: Can you recommend an API that provides rate limiting, caching, and request prioritization for generative AI and serverless environments?

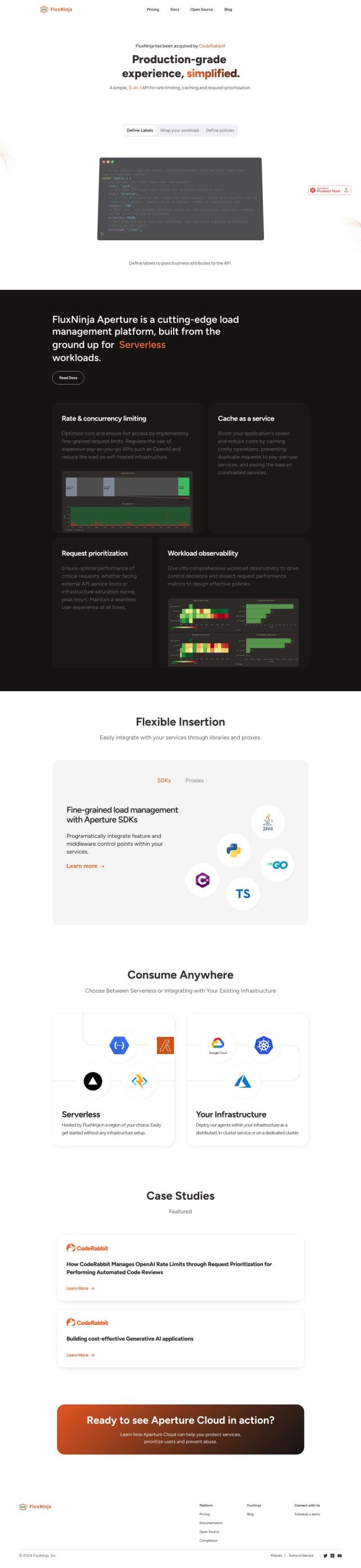

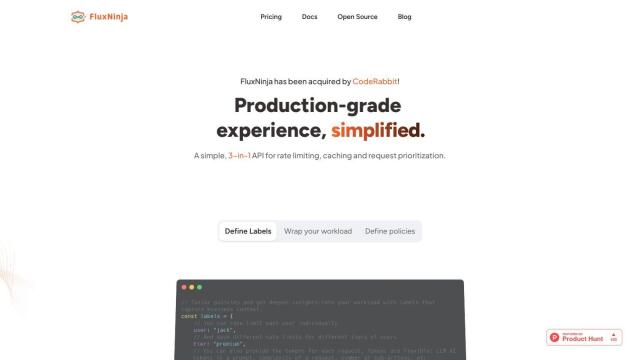

FluxNinja

If you're looking for an API that offers rate limiting, caching, and request prioritization for generative AI and serverless computing, FluxNinja is worth a look. This 3-in-1 API is designed for production use and combines fine-grained rate and concurrency limiting, cache as a service, and request prioritization, with workload observability on top. It can be integrated through either libraries or proxies, so it fits a variety of deployment setups. On the security side, FluxNinja lists SOC 2 Type I compliance and third-party audits to help protect data and privacy.
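To make the three features concrete, here is a minimal, generic Python sketch of how rate limiting, caching, and request prioritization can work together. It is not FluxNinja's API: `TokenBucket`, `PriorityGateway`, `submit`, `drain`, and the `backend` callable are all hypothetical names chosen for illustration, and a token bucket is only one of several possible rate-limiting strategies.

```python
import heapq
import itertools
import time


class TokenBucket:
    """Allow roughly `rate` requests per second, with bursts up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.tokens = float(capacity)
        self.updated = clock()

    def try_acquire(self):
        now = self.clock()
        # Refill in proportion to elapsed time, capped at the bucket capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class PriorityGateway:
    """Hypothetical gateway: answer from cache when possible, else serve by priority."""

    def __init__(self, backend, bucket):
        self.backend = backend  # assumed: any callable that takes a prompt
        self.bucket = bucket
        self.cache = {}
        self.queue = []
        self.order = itertools.count()  # tie-breaker keeps FIFO order within a priority

    def submit(self, prompt, priority):
        # Lower number means more urgent.
        heapq.heappush(self.queue, (priority, next(self.order), prompt))

    def drain(self):
        """Serve queued requests until the queue is empty or the rate limit is hit."""
        results = []
        while self.queue:
            prompt = self.queue[0][2]
            if prompt not in self.cache:
                if not self.bucket.try_acquire():
                    break  # out of tokens; remaining requests stay queued
                self.cache[prompt] = self.backend(prompt)
            heapq.heappop(self.queue)
            results.append((prompt, self.cache[prompt]))
        return results
```

In this sketch, cache hits do not consume rate-limit tokens, and low-priority requests are the ones left waiting when the bucket runs dry. A hosted service would typically add cache expiry, per-tenant limits, and persistence, none of which are shown here.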

Anyscale

Another contender is Anyscale, which bills itself as a way to develop, deploy and scale AI applications more efficiently. It's geared more toward performance and cost optimization, but it also offers workload scheduling, smart instance management and GPU/CPU fractioning for better resource utilization. Anyscale supports many different AI models and, for larger enterprise use, integrates with popular IDEs, persisted storage and Git.

Keywords AI

For a unified DevOps platform, Keywords AI offers a single API endpoint for working with many large language models (LLMs). It is described as handling hundreds of concurrent requests without a latency penalty, integrates with OpenAI APIs, and offers tools for performance monitoring and data collection. Keywords AI is designed to make the AI development process easier, letting developers concentrate on building products instead of worrying about infrastructure.