Question: Is there an AI platform that can help me develop custom models to detect emotional states in audio and video data?

Hume

Hume offers an AI platform for training your own models to recognize emotional states in audio and video data. It includes an Empathic Voice Interface (EVI) that understands and mirrors human prosody and emotion in speech, an Expression Measurement API that captures subtle vocal and facial expressions, and a Custom Model API for building application-specific insights. The platform is aimed at use cases in AI research, social networking, and health and wellness, and Hume positions it as a leader in emotional intelligence.

Valossa

Another interesting option is Valossa, which uses multimodal AI for video analysis. It can automate tasks like transcription, captioning and video mood analysis. With a user-friendly portal and a lightweight API for integration, Valossa is geared toward video-heavy industries like media and entertainment that want to automate routine tasks and gain new insights.

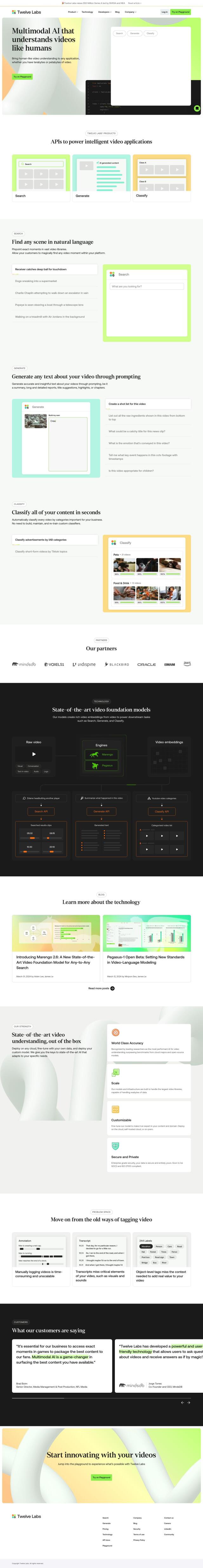

Twelve Labs

If you want a platform that handles both video and audio, Twelve Labs offers a multimodal AI option. Its APIs support fast search, text generation and classification across large video libraries, built on large video foundation models that can process several types of data. The platform's flexibility and customization options make it a good fit for large-scale video and audio content analysis.

Novita AI

Finally, Novita AI offers a full-stack AI platform with APIs for image, video, audio and large language model (LLM) use cases. Its capabilities include text-to-image generation, voice cloning and advanced text-to-speech. With flexible pricing and a focus on data security, it's a general-purpose tool suited to a wide range of AI projects.