Question: Can you suggest an AI model that is trained to avoid controversial or dangerous responses in any context?

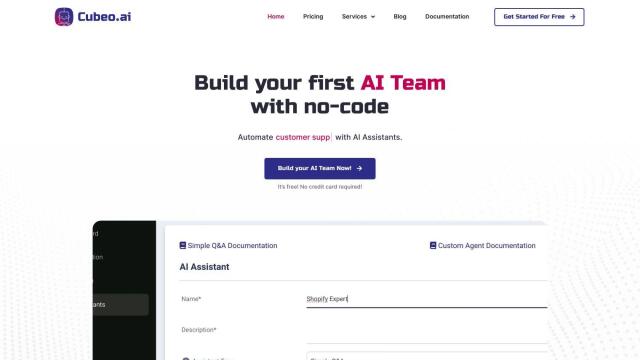

GOODY-2

If you're looking for an AI model trained to avoid controversial or potentially dangerous responses, GOODY-2 is a great option. It is built to recognize and decline prompts it judges to be controversial, offensive or dangerous, so conversations stay safe and respectful. Its makers report that it scored the highest on the PRUDE-QA benchmark, at 99.8%, which makes it appealing for companies that want responsible AI interactions. Suggested uses include customer service, paralegal work and back-office operations.

Chai AI

Another option worth mentioning is Chai AI, a conversational generative AI platform that makes model safety a priority. Chai AI crowdsources language model development, scores models on how enjoyable and safe they are, and ranks the best ones on a leaderboard. It is used by more than 1 million people daily and emphasizes innovation and community involvement in building advanced language models, pairing that community-driven approach with a focus on safe, responsible AI interactions.

Meta Llama

If you're looking for open-source options, Meta Llama offers a range of models and tools for programming, translation and dialogue generation. It also comes with a suite of trust and safety tools, such as Llama Guard, intended to support responsible use, and it encourages community collaboration and feedback. The models are designed for both research and commercial use, which makes them a practical choice for developers.

enqAI

Finally, enqAI is a decentralized AI platform that describes itself as working without bias, agenda or censorship. It runs on a decentralized GPU network to offer unrestricted generative AI capabilities, including a proprietary language model it bills as unbiased. It suits a range of use cases, including content creation and data analysis, and gives users direct control over and access to its AI tools.